Still working through the examples.

Project is at https://github.com/chriseyre2000/absinthe_demo

Currently at commit df7c52f.

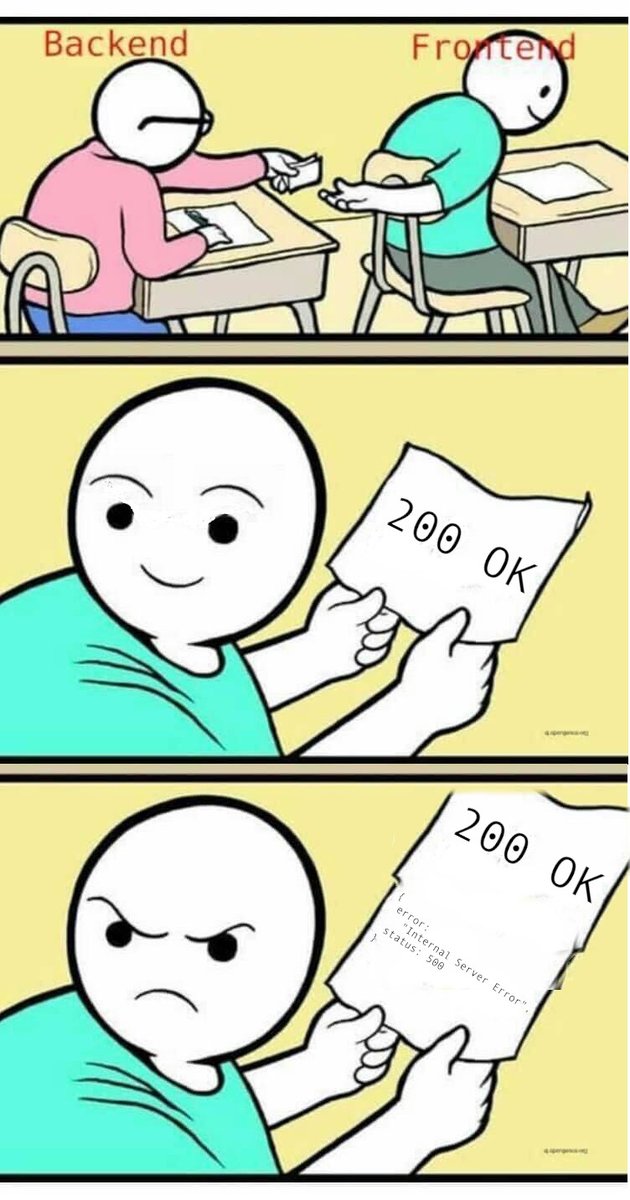

Again there is a minor difference in the error message format, and the error response comes back with HTTP status 200 rather than 400.

It's interesting to see how custom modifications work.

I'm now at the end of chapter 3.

Chapter 4 introduces some useful extra structure, allowing the schema to be broken down into manageable pieces.
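Here is a minimal sketch of what breaking a schema into pieces can look like. It assumes Absinthe 1.7 and that this is the kind of structure the chapter means. Absinthe's `import_types` macro pulls object types from a separate module into the schema, and `import_fields` copies the fields of a container object onto the root `query`. The module, object and field names (`Demo.Schema`, `:menu_item`, `:menu_queries`) and the hard-coded data are hypothetical and are not taken from the book or the demo repo.

```elixir
# Run with: elixir schema_split.exs (Mix.install fetches Absinthe on first run)
Mix.install([{:absinthe, "~> 1.7"}])

# Hypothetical types module: object definitions live outside the main schema.
defmodule Demo.Schema.MenuTypes do
  use Absinthe.Schema.Notation

  object :menu_item do
    field :id, :id
    field :name, :string
  end

  # Container object whose fields are copied onto the root query type.
  object :menu_queries do
    field :menu_items, list_of(:menu_item) do
      # Hypothetical hard-coded data standing in for a real resolver.
      resolve fn _parent, _args, _resolution ->
        {:ok, [%{id: "1", name: "Reuben"}]}
      end
    end
  end
end

defmodule Demo.Schema do
  use Absinthe.Schema

  # Bring every type defined in MenuTypes into this schema.
  import_types Demo.Schema.MenuTypes

  query do
    # Copy the fields of :menu_queries onto the root query.
    import_fields :menu_queries
  end
end

# Field names are camelCased in queries by Absinthe's default adapter.
{:ok, result} = Absinthe.run("{ menuItems { name } }", Demo.Schema)
IO.inspect(result)
```

The main schema module stays small, and each area of the API can get its own types module that is imported in one line.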

The next step will cover unions.