Over the last few years I have been working with software almost exclusively in the cloud. There has not been a single local server running. To do this we use an array of cloud services: databases, content management systems, content delivery networks.

By using these it is possible to operate a system at scale without costing the earth or needing a huge development team. You do still need a development team, as things will always change.

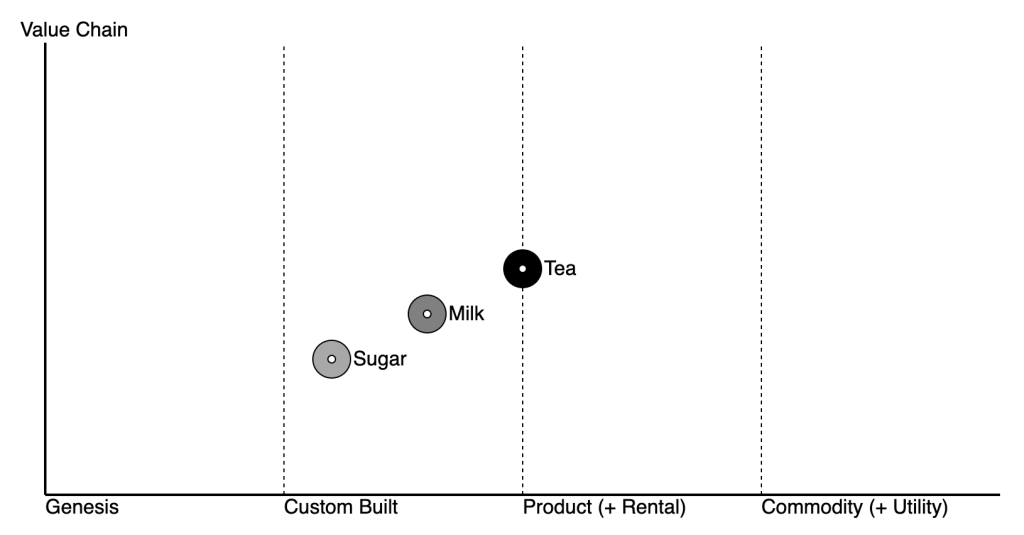

With enough items in your supply chain (you do know your own supply chain?) you will find that in any given year at least one of the providers will decide that they don’t want to (or show that they can’t) run a stable, reliable service. Sometimes this is an attempt to move you to another service. Sometimes it is no longer viable for them to run it.

You have a choice at this point. Do you move to a different provider or do you build it yourself? If you do move to a different provider, how similar is it? I had one case of moving a MongoDB database to an AWS MongoDB-compatible service. In this instance the AWS product had the same API for entering data but vastly different performance characteristics, and in one specific case it could not store some data that existed in the old system. This is fun when you are migrating a database and get “sorry I can’t store that due to an embedded unprintable character”.
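A surprise like that is cheaper to find before the migration than during it. As a minimal sketch, assuming the problem data is a control character hiding inside a string field (the exact character in my case is not the point here), you could scan exported documents first. The function names and the sample document are made up for illustration, and the rule for what counts as "unprintable" (Unicode category `Cc`, minus tab, newline and carriage return) is an assumption, not the target service's actual rule.

```python
import unicodedata

# Assumption: ordinary whitespace controls are acceptable to the target store.
ALLOWED_CONTROLS = {"\t", "\n", "\r"}


def has_control_chars(text):
    """Return True if text contains a control character we do not allow."""
    return any(
        unicodedata.category(ch) == "Cc" and ch not in ALLOWED_CONTROLS
        for ch in text
    )


def find_suspect_fields(value, path=""):
    """Yield the paths of string values that contain control characters."""
    if isinstance(value, str):
        if has_control_chars(value):
            yield path
    elif isinstance(value, dict):
        for key, item in value.items():
            child = f"{path}.{key}" if path else str(key)
            yield from find_suspect_fields(item, child)
    elif isinstance(value, list):
        for index, item in enumerate(value):
            yield from find_suspect_fields(item, f"{path}[{index}]")


# Hypothetical document, as it might come out of an export.
sample = {
    "name": "ok",
    "notes": ["fine", "bad\x00value"],
    "meta": {"tag": "a\x07b", "body": "line1\nline2"},
}
suspects = list(find_suspect_fields(sample))
```

Running something like this over a full export gives you a list of records to clean or question before the new provider rejects them one at a time.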

When you choose a vendor, keep the analysis of the second and third choices. They may be needed later. Always plan an exit strategy. Those fancy platform-specific features that you like so much can be a real pain when the next platform does not have them.
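One way to keep an exit open is to have application code talk to a small interface of your own rather than directly to the vendor's client. The sketch below is only an illustration under that assumption: `DocumentStore`, `InMemoryStore`, `save_profile` and `load_profile` are hypothetical names, and a real adapter would wrap whichever provider SDK you actually use.

```python
from typing import Optional, Protocol


class DocumentStore(Protocol):
    """The only storage operations the application is allowed to rely on."""

    def put(self, key: str, document: dict) -> None: ...

    def get(self, key: str) -> Optional[dict]: ...


class InMemoryStore:
    """Stand-in implementation; a vendor adapter would expose the same methods."""

    def __init__(self) -> None:
        self._items = {}

    def put(self, key: str, document: dict) -> None:
        # Store a copy so callers cannot mutate stored data by accident.
        self._items[key] = dict(document)

    def get(self, key: str) -> Optional[dict]:
        found = self._items.get(key)
        return dict(found) if found is not None else None


def save_profile(store: DocumentStore, user_id: str, profile: dict) -> None:
    """Application code depends on the interface, not on a vendor client."""
    store.put(f"profile:{user_id}", profile)


def load_profile(store: DocumentStore, user_id: str) -> Optional[dict]:
    return store.get(f"profile:{user_id}")


store = InMemoryStore()
save_profile(store, "u1", {"name": "Ada"})
loaded = load_profile(store, "u1")
```

The interface is deliberately boring. Every platform-specific feature you add to it is one more thing the next provider has to match.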

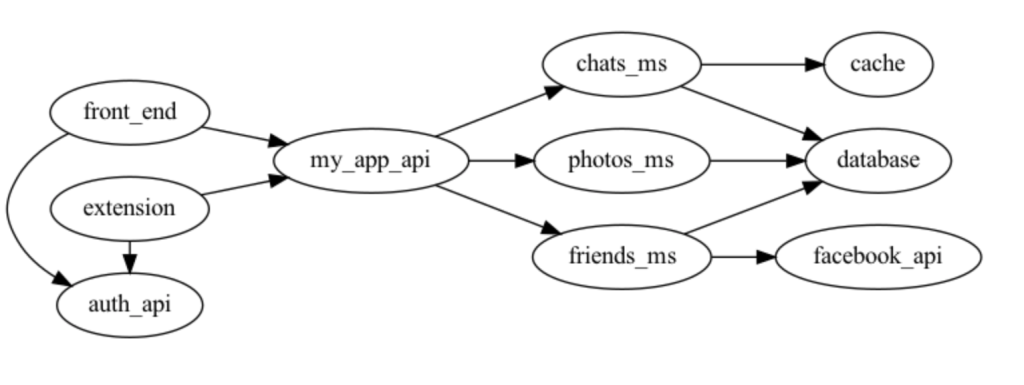

Building it yourself may be an option, but you are now taking on complexities that you didn’t previously have.